Zero-Shot Learning: Everything You Need to Know

Imagine an AI that doesn’t need mountains of labeled data to recognize something new. Sounds futuristic, right? Well, that’s exactly what zero-shot learning (ZSL) brings to the table.

Instead of relying on endless training examples, ZSL enables AI models to identify and classify new concepts they’ve never seen before, with no task-specific training required. That means they can tackle fresh tasks, adapt to new products, and even enter different markets without constant retraining. This not only saves time but also slashes data collection and annotation costs while making AI more flexible and powerful.

In this article, we’ll break down how ZSL works, the challenges it faces, and where it’s making an impact, with real-world examples to bring it to life. Let’s get started!

Zero-shot learning (ZSL) is a machine learning technique that enables AI models to handle tasks or recognize objects they’ve never encountered before. Instead of needing direct training for every possible scenario, ZSL allows a model to make sense of new situations by drawing on what it already knows and making logical connections.

Think of a model trained to recognize animals, but with no prior exposure to zebras. Normally, it wouldn’t know what to do with an image of a zebra. But with ZSL, it can still figure it out. If the model understands general animal traits—like having four legs, living in the wild, or having distinct patterns—it can use a given description, such as “a horse-like animal with black and white stripes that lives in African grasslands,” to make an educated guess. Even without having seen a zebra before, it can connect the dots between the description and its existing knowledge of animals to correctly classify it.
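The attribute-based reasoning in this zebra example can be sketched in a few lines of Python. This is a minimal sketch of a simplified direct attribute prediction (DAP) scorer that ignores attribute priors. It assumes a hypothetical attribute detector has already produced one probability per attribute for the image; the attribute names, class signatures, and probabilities are all invented for illustration.

```python
import math

# Illustrative attribute vocabulary (hypothetical, not from a real dataset).
ATTRIBUTES = ["four_legs", "lives_in_wild", "has_stripes", "horse_like"]

# Each class is described only by which attributes it has (1) or lacks (0).
# "zebra" has no training images at all; it exists purely as this description.
CLASS_SIGNATURES = {
    "horse": [1, 0, 0, 1],
    "tiger": [1, 1, 1, 0],
    "zebra": [1, 1, 1, 1],
}


def class_score(attr_probs, signature):
    """Log-probability that an image matches a class signature, assuming
    attributes are independent (the simplification DAP makes)."""
    score = 0.0
    for p, has_attr in zip(attr_probs, signature):
        score += math.log(p if has_attr else 1.0 - p)
    return score


def zero_shot_classify(attr_probs, signatures):
    """Pick the class whose attribute signature best explains the predictions."""
    return max(signatures, key=lambda name: class_score(attr_probs, signatures[name]))


# Hypothetical output of an attribute detector (trained on seen animals only)
# for a zebra photo, one probability per entry in ATTRIBUTES.
zebra_photo = [0.95, 0.90, 0.85, 0.80]
print(zero_shot_classify(zebra_photo, CLASS_SIGNATURES))  # zebra
```

Adding another unseen animal is just one more entry in `CLASS_SIGNATURES`; the attribute detector itself never has to be retrained.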

This ability to infer and adapt without direct training is what makes ZSL so powerful. It allows AI to handle new challenges dynamically, rather than being limited by the data it was initially trained on.

Zero-shot learning (ZSL) operates in two stages, training and inference, and relies on three key elements: pre-trained models, additional information, and knowledge transfer.

At its core, ZSL depends on pre-trained models that have already learned from vast amounts of data. These models provide a strong base of general knowledge. For example, GPT models process language, while CLIP, trained on image-text pairs, maps images and text into a shared embedding space. Instead of starting from scratch, ZSL builds on this existing knowledge to handle new concepts.

Since ZSL models need to recognize things they’ve never explicitly seen before, they rely on additional information to make sense of new data. This could come in the form of text descriptions, specific attributes or features, word associations, or vector representations. These inputs help guide the model in making educated guesses about unfamiliar concepts.

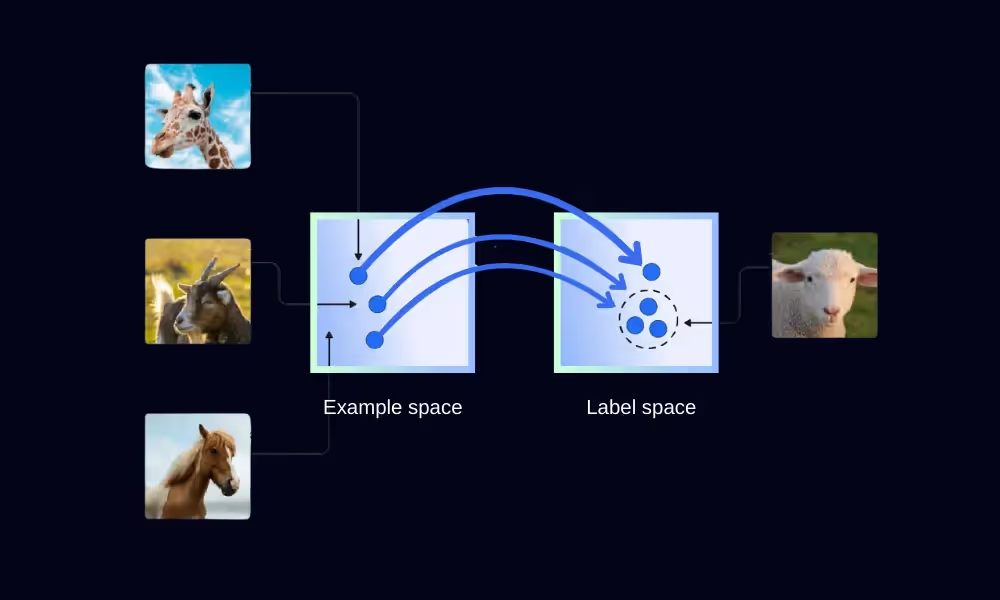

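To bridge the gap between what’s known and what’s new, ZSL maps both familiar and unfamiliar classes into a shared semantic space where they can be compared.

ZSL unfolds in two key phases. During training, the model typically learns from labeled examples of seen classes how inputs (such as images) relate to their semantic descriptions in that shared space. During inference, a new input is encoded into the same space, compared with the description of every candidate class, including classes that had no training examples, and assigned to the closest match.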

This dynamic approach enables ZSL models to recognize an ever-expanding set of concepts over time, simply by using descriptions, attributes, or other semantic information—no extra labeled training data required.
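The training and inference phases can be made concrete with a small sketch. It assumes an attribute-style semantic space and learns a linear map from hypothetical image features into that space by gradient descent, which is one simple way to build the shared space among several (real systems typically rely on large pre-trained encoders). The class names, attribute values, and feature vectors are invented for illustration.

```python
import math

# Hypothetical semantic space with three attributes: [horse_like, striped, carnivore].
# Phase 1 (training) only sees labeled examples of SEEN classes.
# The feature vectors stand in for outputs of a frozen image encoder.
SEEN_TRAINING_DATA = [
    ([1.0, 0.0, 0.5], [1, 0, 0]),  # horse
    ([0.5, 1.0, 1.0], [0, 1, 1]),  # tiger
    ([0.0, 0.0, 1.0], [0, 0, 1]),  # wolf
]


def project(W, x):
    """Map a feature vector x into the semantic space with the linear map W."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def train_projection(data, dim_in, dim_out, lr=0.05, epochs=1000):
    """Fit W by full-batch gradient descent on the squared error between
    projected features and the seen classes' attribute vectors."""
    W = [[0.0] * dim_in for _ in range(dim_out)]
    for _ in range(epochs):
        grad = [[0.0] * dim_in for _ in range(dim_out)]
        for x, target in data:
            pred = project(W, x)
            for i in range(dim_out):
                err = pred[i] - target[i]
                for j in range(dim_in):
                    grad[i][j] += 2 * err * x[j]
        for i in range(dim_out):
            for j in range(dim_in):
                W[i][j] -= lr * grad[i][j]
    return W


def classify(W, x, class_embeddings):
    """Phase 2 (inference): project x and return the nearest class description."""
    z = project(W, x)
    return min(class_embeddings, key=lambda c: math.dist(z, class_embeddings[c]))


W = train_projection(SEEN_TRAINING_DATA, dim_in=3, dim_out=3)

class_embeddings = {"horse": [1, 0, 0], "tiger": [0, 1, 1], "wolf": [0, 0, 1]}
zebra_features = [1.5, 1.0, 0.5]  # hypothetical features of a zebra photo
print(classify(W, zebra_features, class_embeddings))  # horse (zebra not yet a candidate)

# Add the unseen class from its description alone; W is not retrained.
class_embeddings["zebra"] = [1, 1, 0]  # horse-like, striped, not a carnivore
print(classify(W, zebra_features, class_embeddings))  # zebra
```

The key detail is in the last two lines: the zebra becomes recognizable the moment its description is added to `class_embeddings`, without touching `W`. This works here because the seen classes jointly cover every attribute dimension; if no seen class were ever striped, the learned map would have no reliable way to project stripes.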

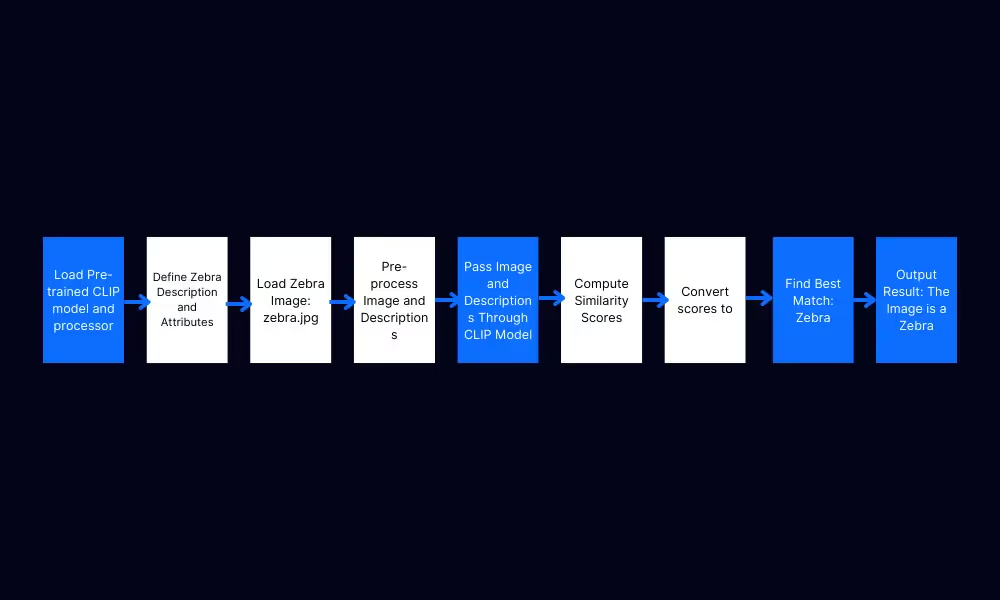

Let’s see zero-shot learning in action with an example. Imagine we're using CLIP, a model pre-trained on a vast dataset of image-text pairs. This model has broad knowledge about animals but has never been specifically trained on zebras.

Here’s where auxiliary information comes in. The model is given text descriptions like: “A horse-like animal with black and white stripes that lives in African grasslands.” It also receives key attributes such as “has four legs,” “has stripes,” and “lives in the savanna.” These details serve as the bridge between what the model already knows (general animal traits) and the unknown category (zebras).

Next, the model maps both familiar animals—like horses and tigers—and the unseen zebra into a shared semantic space, where they can be compared. By encoding the description “black and white stripes, horse-like” in the same way it processes known animals, the model can analyze similarities.

Instead of guessing randomly, the model encodes the zebra image and calculates a similarity score between it and the encoded description of each candidate animal, the zebra description included. Because the image combines a horse-like body shape with a striped pattern, traits the model already associates with horses and tigers, it lands closest to the “black and white stripes, horse-like” description. The model therefore identifies the zebra correctly, even though it has never explicitly learned what a zebra is.
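The scoring step in this walkthrough boils down to a cosine similarity followed by a softmax. The sketch below mimics how CLIP-style models score one image against several candidate text prompts, assuming toy 4-dimensional vectors in place of real encoder outputs. In practice both embeddings come from the model’s image and text encoders, and CLIP learns its scale factor rather than fixing it at 100 as this sketch does.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a, b):
    """Cosine similarity: dot product of the unit-length vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def zero_shot_probs(image_emb, text_embs, logit_scale=100.0):
    """Score an image against every candidate description, then softmax."""
    logits = {label: logit_scale * cosine(image_emb, emb) for label, emb in text_embs.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(score - top) for label, score in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


# Toy 4-d vectors standing in for the text encoder's output on each prompt.
text_embs = {
    "horse": [0.9, 0.1, 0.0, 0.2],  # "a photo of a horse"
    "tiger": [0.1, 0.9, 0.3, 0.0],  # "a photo of a tiger"
    "zebra": [0.7, 0.7, 0.0, 0.1],  # "a horse-like animal with black and white stripes"
}
image_emb = [0.6, 0.65, 0.05, 0.1]  # toy stand-in for the image encoder's output

probs = zero_shot_probs(image_emb, text_embs)
print(max(probs, key=probs.get))  # zebra
```

The image is also fairly similar to the horse and tiger prompts; the zebra description wins because it captures both traits at once, and the scale factor sharpens those close cosine scores into a confident distribution.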

This ability to generalize from known categories to entirely new ones is what makes zero-shot learning so powerful. It’s not just about animals; this same principle applies across industries, from medical diagnostics to customer support automation, where AI needs to handle new scenarios without retraining.

Zero-shot learning (ZSL) and few-shot learning (FSL) are both techniques that help AI models handle new tasks or recognize new objects, even when there’s little to no labeled data available. However, they work in different ways and are suited for different scenarios.

If you have no labeled data at all, ZSL is the way to go. It’s ideal when new categories, tasks, or products emerge, and there’s no time or resources to manually label examples. For instance, an online store that frequently adds new product categories can use ZSL to classify items based on descriptions alone—without needing human input. This makes ZSL a flexible and scalable solution when gathering labeled data is too expensive, slow, or impractical.
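For text, the same idea lets a store classify products against category descriptions it writes itself. The sketch below is a deliberately simple, dataless classifier: it assumes a bag-of-words vector as a stand-in for a pre-trained text encoder, and the category names and descriptions are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in text encoder: lowercase bag-of-words counts.
    A real system would use a pre-trained sentence encoder instead."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# New categories are added by writing a description; no labeled products needed.
CATEGORIES = {
    "camping gear": "tent sleeping bag camping outdoor hiking lantern",
    "kitchenware": "kitchen cooking pan pot knife utensils baking",
    "pet supplies": "dog cat pet leash collar food toys",
}


def categorize(product_description, categories):
    """Assign the category whose description is most similar to the product."""
    item = embed(product_description)
    return max(categories, key=lambda c: cosine(item, embed(categories[c])))


print(categorize("Waterproof two-person tent for hiking trips", CATEGORIES))  # camping gear
```

Because bag-of-words vectors only match exact words, a product that shares no vocabulary with any description scores 0 everywhere and falls back to the first category; swapping `embed` for a real sentence encoder is what makes the approach robust to paraphrases.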

On the other hand, FSL shines when you can provide just a handful of labeled examples (usually 1–5) and need the model to learn quickly while maintaining accuracy. Imagine a chatbot suddenly encountering a new type of customer query, such as “How do I cancel my subscription?” Instead of retraining the entire model, FSL allows it to learn from just a few labeled examples and accurately classify similar questions moving forward.

This makes FSL particularly useful for scenarios where precision is key, like customer support, fraud detection, or medical imaging—where even a small mistake could have significant consequences.
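One common way to learn from a handful of examples without retraining is nearest-prototype classification, the idea behind prototypical networks: average the few labeled embeddings for each class and assign a new query to the closest average. The sketch below assumes the query embeddings come from a frozen, pre-trained encoder; the intent names and vectors are hypothetical. Fine-tuning on the few examples is another common option.

```python
import math


def prototype(examples):
    """Mean of the few labeled embeddings for one class."""
    n = len(examples)
    return [sum(vals) / n for vals in zip(*examples)]


def few_shot_classify(query, support_set):
    """Return the label whose prototype is nearest (Euclidean) to the query."""
    protos = {label: prototype(embs) for label, embs in support_set.items()}
    return min(protos, key=lambda label: math.dist(query, protos[label]))


# Hypothetical 3-d embeddings of labeled customer queries (2-3 per intent).
support_set = {
    "cancel_subscription": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [0.85, 0.0, 0.05]],
    "billing_question": [[0.1, 0.9, 0.2], [0.2, 0.8, 0.1], [0.0, 0.85, 0.3]],
    "shipping_status": [[0.1, 0.1, 0.9], [0.2, 0.0, 0.8]],
}
query = [0.75, 0.15, 0.1]  # e.g. the embedding of "How do I cancel my subscription?"
print(few_shot_classify(query, support_set))  # cancel_subscription
```

Supporting a new intent is as cheap as adding two or three embedded examples to `support_set`. That is the practical difference from zero-shot learning: here a new class is defined by labeled examples rather than by a description.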

Both techniques help AI models adapt to new challenges, but the right choice depends on how much labeled data is available and how critical accuracy is for the task at hand.

Zero-shot learning is reshaping the way AI models tackle new challenges by eliminating the need for massive labeled datasets. Instead of requiring extensive retraining, ZSL allows models to classify, predict, and reason about unfamiliar data simply by leveraging descriptions, attributes, and prior knowledge. This makes it an invaluable tool across various industries, from automating text classification and enhancing image recognition to improving medical diagnostics and delivering personalized recommendations.

Of course, ZSL isn’t flawless. Like any AI technique, it can struggle with complex concepts, nuanced language, or highly specific domains where context matters. However, its ability to adapt to new tasks dynamically—without human-labeled training data—makes it a game-changer, especially in environments where speed, scalability, and cost-efficiency are essential.