Setting Standards for Copilot Agent Development

The democratisation of AI has unlocked unprecedented opportunities for innovation. Once confined to IT and core operational functions, AI is now empowering teams in marketing, HR, finance, and beyond to drive efficiency and creativity. Among the most transformative developments is the ability for organisations to design and deploy their own AI-powered agents through platforms such as Microsoft’s Copilot Studio.

This shift from centralised AI deployment to a more decentralised, user-driven approach brings significant benefits, but it also introduces new risks. The proliferation of custom Copilot agents, tailored to unique organisational needs, creates challenges in ensuring consistent standards, ethical use, and operational safety. The potential for misuse, whether intentional or accidental, grows as the barriers to creating these agents fall.

The harms caused by poorly governed AI systems are well-documented. High-profile cases of unintended bias, misinformation, and ethical lapses have highlighted the need for proactive governance. Regulation, such as the EU AI Act, has already arrived, introducing provisions for General Purpose AI (GPAI) systems like Copilot.

Organisations without robust governance structures now face the risk of penalties and fines, particularly when these systems are deployed in sensitive environments or high-risk use cases, where the potential for harm is amplified. This makes it imperative for businesses to establish internal accountability frameworks that ensure compliance and mitigate risk. In this article we outline a practical framework for establishing governance structures specific to Copilot agents. Drawing on our experience across industries and organisations of all sizes, we have developed a DIY Governance guide for setting standards for Copilot agent development, which you can download at the end of the article.

A successful working or steering group is characterised by two key traits: a comprehensive understanding of AI adoption across the organisation, and an appreciation of the potential risks and opportunities.

What Should Be Included in a Working Group?

These groups typically consist of stakeholders from various parts of the business who interact with or oversee new tools and strategies. In the context of Copilot, the group acts as a central body that ensures alignment, reduces duplication in agent development, and fosters responsible innovation across the organisation. AI champions are vital to the success of AI implementation and play a valuable role in the steering group. One AI champion, typically identified during change management processes or adoption accelerators, should form a key part of the steering group. Champions provide practical insight into how AI is deployed, highlight the challenges users face, and help ensure governance policies match the actual needs and workflows of AI users, which increases buy-in and adherence.

In terms of seniority, the working group should report to middle or senior managers, with a clear escalation path to the C-suite. For AI initiatives, including governance of Copilot agents, the Chief Data Officer or Chief Technology Officer typically serves as the key point of reference. In regulated industries such as financial services, this responsibility often falls to the Chief Risk Officer, given their focus on managing compliance and mitigating AI-related risks.

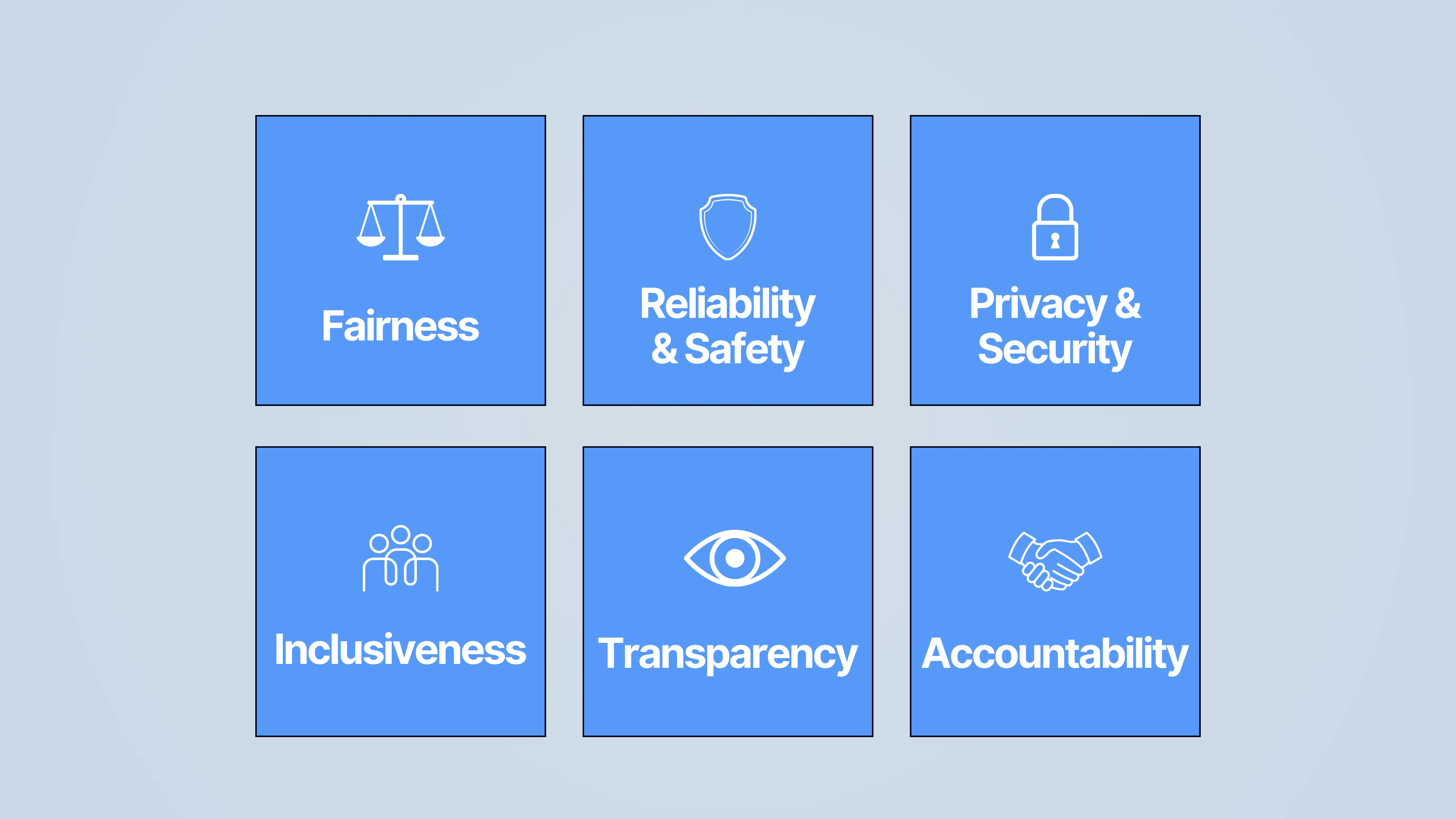

What Principles Should a Working Group Adopt?

The foundation of a successful working group is a robust set of AI ethics or governance principles. For organisations deploying Copilot, adopting Microsoft’s Responsible AI Principles provides a practical, industry-aligned framework. These principles cover fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability, and help ensure that AI systems are designed and used responsibly. They align closely with the OECD AI Principles and the provisions of the EU AI Act, offering a cohesive approach to governance.

The working group has a number of other responsibilities, such as awareness building, community building, and policy writing. All of these, including template documents, are covered in the free DIY Governance download.

What Is and Is Not Considered AI?

Defining what qualifies as AI within an organisation can be contentious. The challenge becomes even more nuanced with Copilot agents created in Copilot Studio or automation tools built with Microsoft Power Apps, as their functionality often blurs the traditional line between AI and advanced automation.

These tools enable teams to design highly customised solutions, from conversational agents to workflow automations, which might not traditionally be labelled "AI" but fall within the same governance scope. Stakeholders may try to exclude such systems from the AI classification to avoid governance requirements, but this leads to fragmented oversight and missed risks. A rigid, static definition could also make governance harder to adapt as these tools evolve. We recommend adopting a widely recognised, future-proof definition, such as the OECD definition of AI, which states:

“A machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations, or decisions influencing real or virtual environments. AI systems are designed to operate with varying levels of autonomy.”
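One way to apply this definition consistently is to turn each of its elements into a yes/no screening question asked at registration. The sketch below assumes a hypothetical questionnaire captured as a `SystemProfile` (the class and field names are illustrative, not part of any Microsoft or OECD tooling). It treats a system as in scope only when all four elements hold and deliberately does not test autonomy, since the definition explicitly allows for varying levels of it.

```python
from dataclasses import dataclass


@dataclass
class SystemProfile:
    """Registrant's answers for one candidate system (hypothetical questionnaire)."""
    name: str
    machine_based: bool              # implemented in software/hardware, not a manual process
    human_defined_objectives: bool   # pursues goals that people have set for it
    produces_outputs: bool           # makes predictions, recommendations, or decisions
    influences_environment: bool     # those outputs affect real or virtual environments


def meets_oecd_definition(profile: SystemProfile) -> bool:
    """Return True only when every element of the quoted OECD definition holds.

    Autonomy is not checked: the definition covers "varying levels of autonomy",
    so a low-autonomy agent or workflow still counts as AI.
    """
    return all((
        profile.machine_based,
        profile.human_defined_objectives,
        profile.produces_outputs,
        profile.influences_environment,
    ))


if __name__ == "__main__":
    candidates = [
        SystemProfile("HR policy Q&A agent", True, True, True, True),
        SystemProfile("Static expense claim form", True, True, False, False),
    ]
    for p in candidates:
        verdict = "in scope" if meets_oecd_definition(p) else "out of scope"
        print(f"{p.name}: {verdict}")
```

Under this screening, a workflow automation that recommends an approver answers yes to all four questions and lands within governance scope, which is exactly the kind of borderline system stakeholders might otherwise try to exclude.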

What Key Information Should Be Catalogued?

Given the unique capabilities of Copilot agents, registering their development and deployment requires a tailored approach. While detailed technical metadata, such as neural network weights, may not be practical for governance purposes, certain high-level attributes should always be logged.
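As a minimal sketch, a catalogue entry could be modelled as a Python dataclass. The field names below (`business_owner`, `data_sources`, `processes_personal_data`, `deployment_status` and so on) are hypothetical examples of high-level attributes, not a Microsoft or regulatory schema. The point is that governance-level facts are captured while low-level technical metadata such as model weights is left out.

```python
from dataclasses import dataclass, field, asdict
from datetime import date
import json


@dataclass
class AgentRegistration:
    """One catalogue entry for a Copilot agent.

    All field names are hypothetical examples of high-level attributes;
    low-level technical metadata (e.g. model weights) is intentionally absent.
    """
    agent_name: str
    business_owner: str
    department: str
    purpose: str
    data_sources: list[str] = field(default_factory=list)
    processes_personal_data: bool = False
    deployment_status: str = "ideation"  # e.g. ideation, pilot, production
    registered_on: str = field(default_factory=lambda: date.today().isoformat())

    def missing_fields(self) -> list[str]:
        """List the required text fields that were left blank."""
        required = ("agent_name", "business_owner", "department", "purpose")
        return [name for name in required if not getattr(self, name).strip()]


if __name__ == "__main__":
    entry = AgentRegistration(
        agent_name="Invoice triage agent",
        business_owner="Finance Operations Lead",
        department="Finance",
        purpose="Route incoming supplier invoices to the right approver",
        data_sources=["SharePoint: Invoices library"],
    )
    print(entry.missing_fields())  # an empty list means the entry is complete
    print(json.dumps(asdict(entry), indent=2))
```

Serialising entries to JSON keeps the register tool-agnostic, so the same records could later be loaded into whatever repository the working group settles on.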

Where to Locate AI Within the Company?

AI systems, including Copilot agents, are often embedded across a wide range of functions and departments. Discovering where they are involves investigating multiple data sources:

- Privacy Risk/Data Protection Impact Assessments: these flag systems that use sensitive or personal data, which may include custom Copilot agents integrated into workflows, particularly in HR or finance.
- Infosec reports: these often capture third-party models used across the organisation.
- Microsoft Purview and developer repositories (e.g., GitHub/GitLab): engineering teams may keep repositories of Copilot agents and workflows built internally with Copilot Studio.

Given the proliferation of AI, some teams may find the governance challenge overwhelming. However, starting with an initial risk assessment that prioritises high-impact areas makes the process more manageable.

Going Forward: Voluntary or Mandatory Registration?

To create a centralised repository of AI systems and Copilot agents, businesses should begin with a voluntary registration process. This can be coupled with awareness-building activities and tools like Microsoft Viva to engage teams and encourage participation. Over time, as the process matures and executive sponsorship grows, organisations can transition to a mandatory registration system. This ensures comprehensive coverage, especially as Copilot agents become more integral to business operations.

Which Risks Should Be Considered?

These categories fall under the umbrella of Governance Risks: risks associated with projects and centred on management, compliance, and appropriate processes. In the context of AI, these are driven and influenced by risks inherent to the technology, also called Technical AI Risks (e.g., AI Bias, AI Transparency), which are better measured using appropriate methodologies and software.

For example, the driver behind reputational damage is often a Technical AI Risk such as bias. If that risk is not managed properly at both the AI level and the Governance level, businesses may suffer consequences for business performance (e.g., having to settle a lawsuit).

The main takeaway is this: how well AI risks are managed directly affects business performance.

Operational and Strategic Risks

Risks that are not inherent to the AI system itself (inherent risks being issues such as bias, transparency, or ethical concerns) often fall under Operational Risks or Strategic Risks. These arise from how AI interacts with an organisation’s existing infrastructure, workflows, and workforce. Such risks are contextual and tied to the broader adoption of AI rather than intrinsic to the system. For example, integrating Copilot agents may disrupt existing IT landscapes, introduce new dependencies on data flows, or affect organisational culture as employees adapt to new processes and tools.

Risk Amplification Factors & Mitigation by Design

To mitigate risks effectively, certain factors must be considered throughout the lifecycle of Copilot agents, from ideation to deployment. These factors not only influence the level of risk but also align with how risk is assessed under the EU AI Act for GPAI. Addressing them during development helps ensure that risks are managed proactively and in line with regulatory requirements.

How Should Businesses Risk-Grade, and What Risk Tiers Should Be Used?

Each registered AI use case, including Copilot agents, should be assigned an overall risk grade, broken down into contributing factors such as Financial, Reputational, Ethical, and Regulatory risk. This grading helps prioritise risk management efforts and informs governance strategy.
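The grading described above can be sketched in a few lines. This example assumes a 1 to 5 score per contributing factor and three tiers (`LOW`, `MEDIUM`, `HIGH`); the scale, the thresholds, and the choice to take the worst single factor rather than an average are all illustrative assumptions, not a prescribed methodology.

```python
from enum import IntEnum

# The four contributing factors named in the grading approach above.
FACTORS = ("financial", "reputational", "ethical", "regulatory")


class Tier(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def overall_grade(scores: dict[str, int]) -> Tier:
    """Combine per-factor scores (1 = minimal, 5 = severe) into one overall tier.

    Uses the worst single factor, so a severe regulatory exposure cannot be
    averaged away by low scores elsewhere. Thresholds are illustrative.
    """
    missing = [f for f in FACTORS if f not in scores]
    if missing:
        raise ValueError(f"missing factor scores: {missing}")
    for factor in FACTORS:
        if not 1 <= scores[factor] <= 5:
            raise ValueError(f"{factor} score must be between 1 and 5")
    worst = max(scores[f] for f in FACTORS)
    if worst >= 4:
        return Tier.HIGH
    if worst == 3:
        return Tier.MEDIUM
    return Tier.LOW


if __name__ == "__main__":
    cv_screening = {"financial": 2, "reputational": 4, "ethical": 5, "regulatory": 5}
    meeting_notes = {"financial": 1, "reputational": 2, "ethical": 1, "regulatory": 2}
    print(overall_grade(cv_screening).name)   # HIGH
    print(overall_grade(meeting_notes).name)  # LOW
```

Keeping the per-factor scores alongside the overall tier preserves the breakdown, so the working group can see which factor drove a high grade.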

The complexity of the underlying technology (e.g., neural networks vs. decision trees) should not be the primary focus of the initial risk assessment. Instead, prioritise the end use of the application. For instance, a simple algorithm used in a sensitive context like resume screening carries greater inherent risk than a complex neural network used for a low-stakes task like spam filtering.

The initial AI risk assessment should focus on estimating Inherent Risk: the natural level of risk before any controls or mitigations are applied. This step can often be conducted manually or informally, relying on the expertise of the working group and basic documentation processes.
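To make the "end use over complexity" principle concrete, here is a minimal sketch of an inherent-risk estimate that looks only at the use context. The context names, their sensitivity weights, the one-point uplift for decisions about people, and the 1 to 5 scale are all hypothetical assumptions for illustration. `model_complexity` is recorded but intentionally never used in the score.

```python
from dataclasses import dataclass

# Hypothetical sensitivity of each end-use context (1 = low stakes, 5 = high stakes).
USE_CONTEXT_SENSITIVITY = {
    "spam_filtering": 1,
    "meeting_summaries": 2,
    "customer_support": 3,
    "resume_screening": 5,
}

UNKNOWN_CONTEXT_SCORE = 5  # unclassified uses are treated as high until reviewed


@dataclass
class UseCase:
    name: str
    context: str               # key into USE_CONTEXT_SENSITIVITY
    model_complexity: str      # recorded for the register but NOT used in scoring
    decides_about_people: bool


def inherent_risk(use_case: UseCase) -> int:
    """Estimate inherent risk (before any controls) on a 1-5 scale from end use only."""
    score = USE_CONTEXT_SENSITIVITY.get(use_case.context, UNKNOWN_CONTEXT_SCORE)
    if use_case.decides_about_people:
        score += 1  # outcomes that affect individuals raise the stakes
    return min(score, 5)


if __name__ == "__main__":
    screener = UseCase("Rule-based CV screener", "resume_screening", "decision tree", True)
    spam = UseCase("Spam filter", "spam_filtering", "neural network", False)
    for uc in (screener, spam):
        print(f"{uc.name} ({uc.model_complexity}): inherent risk {inherent_risk(uc)}")
```

The simple decision-tree screener scores 5 and the neural-network spam filter scores 1, mirroring the example in the text. Defaulting unknown contexts to the highest score is a conservative choice: a new use case stays high-risk until the working group classifies it, rather than slipping through as low-risk.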

Effective AI governance does not exist in isolation. It intersects deeply with Data Governance, forming an integral part of broader frameworks like IT Governance and Corporate Governance. AI systems, such as Copilot agents, rely on high-quality, well-governed data to function effectively, making the alignment between AI and Data Governance critical for success.

The working group plays a central role here, as it is responsible for ensuring alignment between AI and Data Governance policies, bridging the gaps between technology, data practices, and corporate objectives. Data governance in AI systems, including those developed in Copilot Studio, is critical to ensuring compliance, security, and effective performance.

This article has outlined why robust AI governance matters, particularly for general-purpose AI systems such as Copilot. The foundational steps described here give your organisation the groundwork for responsible and effective AI adoption. You can download the DIY Governance e-guide here.

At Digital Bricks, we offer two distinct partnership models for AI Governance. You can engage with us on a hybrid basis, where we collaborate closely with your internal teams, providing expert guidance and support to enhance your existing governance structures. Alternatively, choose a fully managed solution, where we take full responsibility for implementing and overseeing comprehensive governance frameworks, ensuring seamless compliance, risk management, and operational efficiency.

Both options are designed to deliver scalable, tailored solutions that align with your organisational needs and strategic goals. We empower businesses to connect teams across every stage of the AI lifecycle, from ideation to deployment, ensuring transparency, accountability, and innovation. To learn more about how we can help your organisation implement and scale AI Governance effectively, schedule a call with one of our experts today at info@digitalbricks.ai.