Key Takeaways from the AI Pact Webinar on Article 4 of the EU AI Act


Yesterday, the AI Pact, supported by the EU AI Office and the European Commission, hosted its third webinar on AI literacy. The aim of the webinar was to help organisations learn more about the European Union's approach to Article 4 of the AI Act and to discover the ongoing practices of AI Pact organisations. Article 4 of the AI Act, which requires providers and deployers of AI systems to ensure a sufficient level of AI literacy, entered into application on 2 February 2025. In this article, we break down some of the webinar's key takeaways.

You may be thinking, “Wait. Does the EU AI Act even apply to my company?”

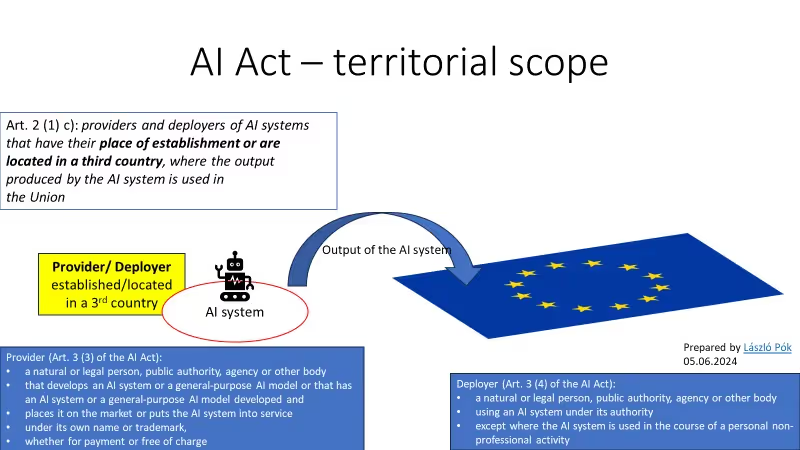

Good question. Like the GDPR, the Act asserts significant extraterritorial jurisdiction. Regardless of a company’s location, the Act applies if a company is developing, selling, importing, manufacturing, distributing, or deploying AI that touches EU residents.

Here are a couple of examples of the Act’s breadth:

The Act addresses many circumstances beyond these examples.

In sum, assuming the Act does not apply to you as a non-EU company is a big mistake that could lead to steep regulatory penalties, reputational damage, and increased legal risk. Look closely at your AI systems before making a decision.

So what’s this AI literacy requirement about?

“AI literacy” refers to the knowledge and understanding required to effectively use, interact with, and critically evaluate AI systems.

The AI literacy requirement aims to enable responsible AI deployment and usage within organisations. Although there are no direct fines for non-compliance, a failure to create sufficient AI literacy may increase penalties for other violations.

More importantly for your AI program, a lack of AI literacy will increase the likelihood of violations occurring. Untrained employees may inadvertently misuse AI, leading to unintended harms or non-compliance with broader governance policies.


Let's break it down now. What do you actually need to do?

The Act does not prescribe any format or method for creating AI literacy, but making a good-faith effort will likely go a long way with regulators. The easiest way to do this is to follow best practices already established in other compliance areas.

Start with these two prerequisites to AI governance:

1. Build an AI Map: Similar to a personal data map, you need to map where AI is being used in your company and by whom. Talk to your IT teams, survey your employees, and review a list of the applications you are paying for. Discover what’s in your tech stack to build a program that fits your organisation.

2. Create an AI Policy: A key part of every AI literacy program should be educating your teams on your company’s guidelines and rules for using AI – just like your privacy policy is a key part of your privacy training.
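As a rough illustration of the first prerequisite, an AI map can start as a simple structured inventory. The sketch below is only one possible shape, not a prescribed format: the `AIUse` fields, the tool and team names, and the `trained` flag are all hypothetical and would be replaced by whatever your own survey and IT review turn up.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUse:
    """One row of the AI map: which team uses which AI tool, and for what."""
    tool: str
    team: str
    purpose: str
    trained: bool  # hypothetical flag: has the team had AI policy training?


def build_ai_map(records):
    """Group usage records by tool so each tool lists the teams using it."""
    ai_map = defaultdict(list)
    for record in records:
        ai_map[record.tool].append(record)
    return dict(ai_map)


def training_gaps(ai_map):
    """Return sorted (tool, team) pairs where the team is not yet trained."""
    return sorted(
        (tool, use.team)
        for tool, uses in ai_map.items()
        for use in uses
        if not use.trained
    )


# Made-up survey results, for illustration only.
records = [
    AIUse("chat assistant", "Marketing", "drafting copy", trained=True),
    AIUse("chat assistant", "Support", "summarising tickets", trained=False),
    AIUse("resume screener", "HR", "shortlisting candidates", trained=False),
]

ai_map = build_ai_map(records)
gaps = training_gaps(ai_map)
print(gaps)
```

Even a small inventory like this makes it easier to see which teams should be first in line for training on your AI policy.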

Once you have these tools in hand, here is how I would approach increasing AI literacy within an organisation:

I know this sounds like a lot. AI has the potential to make us more productive, but achieving the promise of AI will require investing in people and processes. Take it one step at a time and you will be amazed at what you can accomplish in a few months.


On February 20, 2025, the AI Pact, in collaboration with the EU AI Office and the European Commission, hosted its third webinar on AI literacy. The session aimed to help organisations better understand Article 4 of the EU AI Act, which has applied since February 2, 2025. This provision requires providers and deployers of AI systems to take measures to ensure a sufficient level of AI literacy among their staff and other persons who operate or use AI systems on their behalf, equipping them with the knowledge and skills necessary to responsibly develop, deploy, and interact with AI technologies.

One of the key announcements in the webinar was the introduction of a "living repository"—a resource compiling AI literacy practices from AI Pact members. This initiative is designed to foster learning and exchange by showcasing real-world AI literacy initiatives. However, the EU AI Office emphasised that merely adopting these practices does not guarantee compliance with Article 4; organisations must ensure their own approaches align with regulatory expectations.

The webinar featured interactive discussions where AI Pact members shared strategies for promoting AI literacy within their organisations. These discussions provided valuable insights into the practical implementation of literacy programs across different sectors. However, one crucial point remained unresolved—the precise definition of AI literacy in the context of Article 4. While the session provided examples of best practices, it did not explicitly clarify the terminology or establish a concrete framework for compliance.

As organisations work toward meeting AI literacy requirements, it remains essential to stay informed on further guidance from the EU AI Office. Future clarifications and evolving best practices will likely play a significant role in shaping AI literacy initiatives across the European Union.